AI Risk Management for Leaders: Building Clarity, Oversight, and Assurance

Last Updated: December 11, 2025

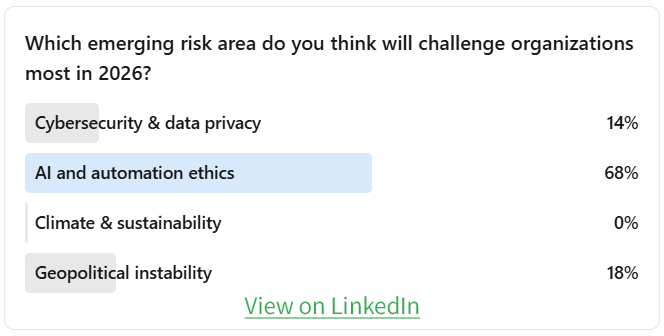

Recently, we asked risk leaders which emerging risk will challenge organizations the most in 2026. The response was overwhelming. Sixty-eight percent chose AI and automation ethics, far outpacing cybersecurity, geopolitical instability, and every other category. Leaders are clearly feeling the uncertainty, the pressure, and the rapid pace of change AI is introducing. Their message is simple: AI risk is no longer a distant concern. It is the risk shaping how organizations think, plan, and operate today.

AI risk management is no longer optional. If you adopt AI without a structured, risk-based strategy, you are not accelerating innovation. You are accelerating uncertainty. AI introduces new layers of complexity, new categories of exposure, and new operational dependencies that organizations are rarely prepared to manage.

This guide explains what AI risk really is, the main types of AI risk, how to apply a risk-based approach, and how Enterprise Risk Management and LogicManager’s Risk Ripple Intelligence help you stay ahead of complexity rather than react to it.

What is AI Risk Management?

AI risk is a measure of how likely a potential AI-related threat is to affect your organization and how much damage that threat could create.

Managing AI risk means looking beyond flashy capabilities and asking harder questions:

- What could go wrong

- How far the impact could spread

- Which systems, people, and processes would feel it

- What it would take to detect and contain the issue

A risk-based approach is not about slowing innovation. It is about ensuring AI adoption creates value without creating blind spots that undermine your strategy.

Categories of AI Risk

AI risks show up in headlines, audits, lawsuits, and operations every day. These risks are already real, measurable, and consequential.

1. Data Risks: AI as a Data Exposure Multiplier

AI systems ingest enormous amounts of information, often sensitive and often dispersed across the organization.

Recent examples highlight the scale of exposure:

- A report found that 84% of AI tools had experienced data breaches, and about half were exposed to credential theft as employees adopted AI tools without proper oversight. (Cybernews)

- Another analysis described AI as a “data-breach time bomb,” finding that 99% of organizations had exposed data that AI tools could access across cloud and SaaS systems. (BleepingComputer)

- A government AI contractor exposed around 550 GB of data, including AI training datasets and employee credentials, due to misconfigured databases, making it possible not only to steal data but also to tamper with training data itself. (Axios)

- OpenAI recently confirmed that a breach at analytics provider Mixpanel exposed some users’ account names, email addresses, and browser locations tied to their AI API usage, raising targeted phishing risks. (Decrypt)

Data risk expands rapidly when you do not have clarity on what data AI tools can touch and how it can be exposed.

2. Model Risks: Hallucinations, Bias, Drift, and Manipulation

AI models do not make loud mistakes. They make quiet mistakes that appear confident and credible, and that are sometimes catastrophic.

Examples include:

- Multiple lawsuits have targeted generative AI systems over defamatory hallucinations, where models allegedly invented false accusations about individuals. Courts have allowed some of these claims to move forward, signaling that model outputs can create real legal exposure. (Ballard Spahr)

- Another ruling favored OpenAI because of documented design choices and transparency practices, showing how strong documentation can reduce legal exposure and how its absence can increase it. (Technology & Marketing Law Blog)

Model risk emerges when you do not understand how the model works, where it fails, or how quickly it can drift from acceptable performance.

3. Operational Risks: AI Fragility and Outages

AI-driven workflows create new operational dependencies. When AI fails, the processes that rely on it fail too.

A notable example:

- A major OpenAI outage on December 26, 2024 brought down ChatGPT, Sora, and the API for hours, disrupting millions of users and every organization that built services on top of the platform. (Daily Security Review)

Operational risk grows as organizations embed AI into core workflows without creating fallback plans, redundancy, or clarity on what to do when the system is unavailable.
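To make the idea of a fallback plan concrete, here is a minimal Python sketch of a wrapper that routes work to a non-AI path when the AI call fails. The names `with_fallback`, `ai_summarize`, and `manual_queue` are hypothetical and stand in for whatever your own workflow uses; a real deployment would also need timeouts, logging, and alerting.

```python
from typing import Callable


def with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
) -> Callable[[str], str]:
    """Wrap an AI-backed step so a failure routes the request to a fallback path."""
    def call(request: str) -> str:
        try:
            return primary(request)
        except Exception:
            # Broad catch is deliberate in this sketch: any failure of the
            # AI dependency should hand the request to the fallback procedure.
            return fallback(request)
    return call


def ai_summarize(text: str) -> str:
    """Hypothetical AI call; here it simulates a vendor outage."""
    raise ConnectionError("AI provider unavailable")


def manual_queue(text: str) -> str:
    """Hypothetical fallback: queue the item for a human reviewer."""
    return f"QUEUED_FOR_HUMAN_REVIEW: {text[:40]}"


summarize = with_fallback(ai_summarize, manual_queue)
result = summarize("Customer reports duplicate charge on invoice")
print(result)
```

The point of the design is that the calling process never needs to know whether the AI path or the human path handled the request, which is what lets the workflow keep running during an outage.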

4. Ethical and Legal Risks: Copyright, Consent, and Misuse

AI’s legal landscape is evolving rapidly, and organizations are discovering their exposure after incidents occur.

Recent examples include:

- Authors sued Anthropic, alleging it used pirated books to train its models, including content from the controversial Books3 dataset. (The Verge)

- French publishers and authors sued Meta, accusing it of illegally using copyrighted works to train its generative AI and raising concerns under the EU’s Artificial Intelligence Act. (AP News)

- Figma faces a proposed class action claiming it misused customer designs to train AI features without proper consent. (Reuters)

- Courts and regulators are increasingly finding that training on copyrighted works without permission may not qualify as “fair use” for commercial AI systems. (Techreport)

Ethical and legal risks rise when AI decisions affect people, rights, and compliance obligations without proper oversight.

5. Workforce and Organizational Risks: Overreliance on Automation

Many organizations rush to replace employees with AI before understanding the risk. This is one of the least visible but most destructive forms of AI risk.

When human teams disappear, so do:

- Institutional knowledge

- Oversight and challenge functions

- Control owners and reviewers

- Ethical judgment and context

- Cross-functional communication

Replacing people too quickly introduces new points of failure and erodes oversight across your risk environment.

How to Apply a Risk-Based Approach to AI

A risk-based approach creates structure in an environment defined by uncertainty. It gives AI programs the oversight, discipline, and consistency they need to create value without introducing unrecognized exposure.

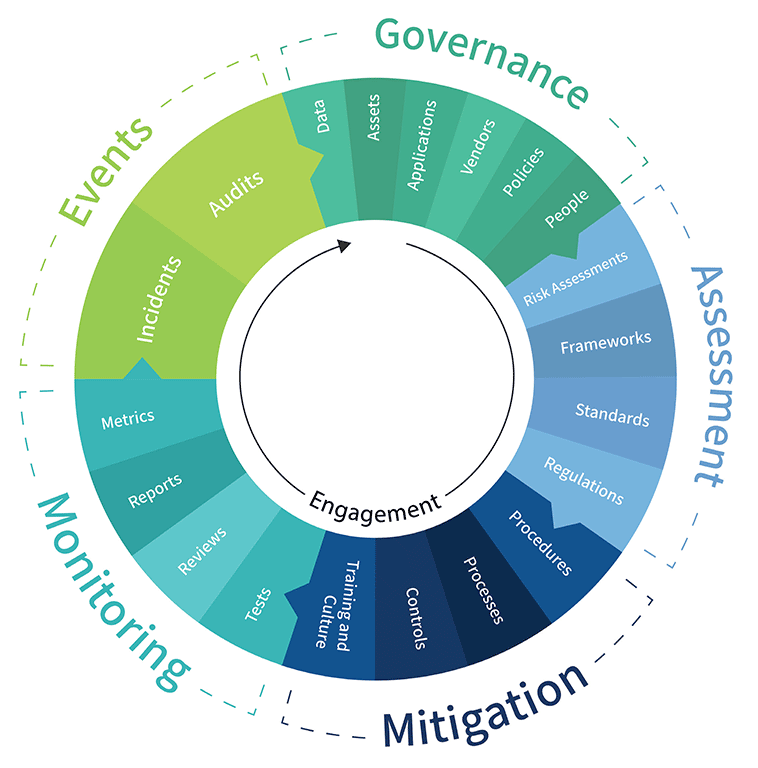

LogicManager’s philosophy aligns with the five steps of the Risk Wheel: Oversight, Assess, Mitigate, Monitor, and Events.

Step 1: Oversight – Build an AI Inventory That Makes the Invisible Visible

Oversight begins with clarity. You cannot manage AI risk until you can see your full AI footprint. This starts with a complete inventory of all AI systems, tools, automations, models, and shadow AI.

For each AI use case, document:

- What the AI system does

- What data it touches

- What decisions or processes it affects

- Who owns it and who can change it

- Inputs, outputs, and dependencies

- Third parties involved

This becomes the foundation of your AI oversight program. Oversight means you understand where AI lives, how it works, and how it connects to the rest of your operations.
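If you are starting an inventory from scratch, a simple structured record is often enough. The sketch below is one possible shape for an inventory entry in Python, with one field per item in the list above. The class name, field names, and the `sanctioned` flag for shadow AI are illustrative assumptions, not a LogicManager schema.

```python
from dataclasses import dataclass, field


@dataclass
class AIInventoryEntry:
    """One AI use case in the inventory (all field names are illustrative)."""
    name: str
    purpose: str                      # what the AI system does
    data_touched: list[str]           # what data it touches
    processes_affected: list[str]     # decisions or processes it affects
    owner: str                        # who owns it
    change_approvers: list[str]       # who can change it
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    dependencies: list[str] = field(default_factory=list)
    third_parties: list[str] = field(default_factory=list)
    sanctioned: bool = True           # False marks shadow AI found outside formal approval

    def missing_fields(self) -> list[str]:
        """Return the core documentation fields that are still empty."""
        required = ["purpose", "data_touched", "processes_affected",
                    "owner", "change_approvers"]
        return [name for name in required if not getattr(self, name)]


entry = AIInventoryEntry(
    name="support-chatbot",
    purpose="Drafts replies to customer support tickets",
    data_touched=["customer emails"],
    processes_affected=["ticket triage"],
    owner="",                 # not yet assigned
    change_approvers=[],      # not yet assigned
)
print(entry.missing_fields())  # ['owner', 'change_approvers']
```

A check like `missing_fields` gives you a quick way to see which use cases are documented well enough to assess and which still have ownership gaps.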

Step 2: Assess – Identify Every AI Risk and Evaluate Impact, Likelihood, and Assurance

Identify each potential risk your AI use case introduces, such as:

- Bias and discrimination

- Data privacy and confidentiality exposure

- Model drift and performance degradation

- Incorrect or misleading outputs

- Regulatory non-compliance

- Adversarial manipulation or prompt injection

- Vendor outages or reliability concerns

- Misuse of synthetic content

- Workforce displacement risk

- Loss of institutional knowledge

- Ethical concerns about transparency or consent

Then conduct structured risk assessments that evaluate:

- Impact: How severe the consequences would be if the risk occurred

- Likelihood: How probable the risk is based on evidence and environment

- Assurance: How confident you are that existing controls can manage the exposure
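One way to combine the three dimensions above into a single ranking is sketched below in Python. The 1 to 5 scales and the formula are assumptions chosen for illustration, not a standard or a LogicManager calculation: impact times likelihood gives an inherent score, and lower assurance leaves more of that score as residual risk.

```python
def residual_risk(impact: int, likelihood: int, assurance: int) -> float:
    """Illustrative residual-risk score; each input is on an assumed 1-5 scale.

    assurance=5 means high confidence in existing controls,
    assurance=1 means almost none.
    """
    for name, value in (("impact", impact),
                        ("likelihood", likelihood),
                        ("assurance", assurance)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    inherent = impact * likelihood            # ranges from 1 to 25
    # Weak assurance keeps most of the inherent score; strong assurance
    # reduces it to one fifth, never to zero.
    return inherent * (6 - assurance) / 5


# Hypothetical assessments: (risk, impact, likelihood, assurance)
assessments = [
    ("Prompt injection", 4, 3, 2),
    ("Vendor outage", 3, 2, 4),
    ("Model drift", 3, 4, 1),
]

ranked = sorted(assessments,
                key=lambda a: residual_risk(a[1], a[2], a[3]),
                reverse=True)
for name, impact, likelihood, assurance in ranked:
    print(f"{name}: {residual_risk(impact, likelihood, assurance):.1f}")
```

With these sample numbers, model drift ranks first: its inherent score matches prompt injection, but confidence in its controls is lower. That is the practical value of scoring assurance separately from impact and likelihood.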

Tip: Assign tasks to risk owners so assessments are completed regularly and consistently. This ensures oversight scales with your AI footprint instead of falling behind it.

Step 3: Mitigate – Design Targeted Controls That Address the Risks You Identified

Mitigation should be direct, specific, and tied to the risk itself.

Here are some examples of controls you may want to implement:

- For bias: model validation, fairness testing, human review thresholds

- For data privacy: access controls, prompt filtering, data minimization

- For model drift: monitoring policies, retraining schedules, change logs

- For operational dependency: redundancy, fallback procedures, runbooks

- For non-compliance: explainability requirements, documentation standards

- For workforce displacement: role clarity, RACI updates, training and upskilling

Mitigation translates insight into action. When done correctly, it reduces uncertainty and strengthens assurance.
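A quick way to test whether mitigation is really tied to the risks you identified is to look for risks with no control mapped to them. The Python sketch below uses the example controls listed above; the dictionary layout and the `uncovered_risks` helper are hypothetical.

```python
# Controls keyed by the risk they address, taken from the examples above.
controls_by_risk = {
    "bias": ["model validation", "fairness testing", "human review thresholds"],
    "data privacy": ["access controls", "prompt filtering", "data minimization"],
    "model drift": ["monitoring policies", "retraining schedules", "change logs"],
    "operational dependency": ["redundancy", "fallback procedures", "runbooks"],
    "non-compliance": ["explainability requirements", "documentation standards"],
    "workforce displacement": ["role clarity", "RACI updates", "training and upskilling"],
}


def uncovered_risks(identified: list[str], controls: dict[str, list[str]]) -> list[str]:
    """Return identified risks that have no control mapped to them."""
    return [risk for risk in identified if not controls.get(risk)]


# Hypothetical output of an assessment for one AI use case.
identified = ["bias", "model drift", "prompt injection"]
print(uncovered_risks(identified, controls_by_risk))  # ['prompt injection']
```

Any risk this returns is an exposure you have named but not yet addressed.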

Step 4: Monitor – Ensure Your Controls Are Effective as AI and the Business Evolve

AI changes faster than policies, teams, or systems. Monitoring is how you make sure your controls continue to work as intended. It verifies that the safeguards you put in place are still effective, still relevant, and still capable of managing the risks you identified.

Effective monitoring looks for:

- Performance changes in AI systems

- Unexpected or inconsistent outputs

- Emerging legal or regulatory requirements

- Shifts in vendor reliability or service levels

- Data drift or degraded data quality

- Incidents, near misses, and operational anomalies

- Gaps between how AI was designed to work and how it is actually being used

Monitoring turns controls into living, active components of your oversight program. It gives you early warning signals that a risk is increasing, a control is weakening, or a new exposure is developing. Monitoring ensures oversight is not a one-time exercise but an ongoing discipline rooted in evidence and assurance.
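As one small example of an early warning signal, the Python sketch below compares recent model performance against an agreed baseline and flags a drop beyond a tolerance. The function name, the 0.05 default tolerance, and the sample scores are assumptions for illustration; the right metric and threshold depend on the use case.

```python
from statistics import mean


def performance_alert(baseline: float,
                      recent_scores: list[float],
                      tolerance: float = 0.05) -> bool:
    """Return True when average recent performance has dropped
    more than `tolerance` below the agreed baseline."""
    if not recent_scores:
        raise ValueError("recent_scores must not be empty")
    return baseline - mean(recent_scores) > tolerance


baseline_accuracy = 0.92                     # hypothetical validated baseline

stable_week = [0.91, 0.90, 0.89]             # small dip, within tolerance
degraded_week = [0.85, 0.84, 0.83]           # sustained drop

print(performance_alert(baseline_accuracy, stable_week))    # False
print(performance_alert(baseline_accuracy, degraded_week))  # True
```

A check this simple will not catch every form of drift, but it shows the pattern: define the expected level, measure on a schedule, and route any breach to the risk owner.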

Step 5: Events – Use Incidents and Opportunities to Improve the Program

Every AI incident, misclassification, outage, or compliance question is an opportunity to strengthen your oversight.

Events should trigger:

- Root cause analysis

- Updates to the AI inventory

- Control improvements

- Policy adjustments

- New assessment tasks

- Cross-functional conversations

- Board-level reporting

Events are signals that reveal how your oversight can evolve. Strong programs treat events as catalysts for continuous improvement rather than reputational damage to be hidden.

How LogicManager and Risk Ripple Intelligence Strengthen AI Risk Oversight

Managing AI risk is really about managing complexity. AI expands the number of systems involved, the volume of data moving across the organization, and the number of decisions happening without human involvement. This complexity introduces uncertainty unless you have a clear structure for understanding it.

LogicManager gives you that structure.

A Centralized Source of Truth That Replaces Guesswork With Confidence

Most organizations struggle because AI oversight is fragmented. Each department sees only its small piece of the puzzle. LogicManager brings the entire AI environment together so leaders finally get a complete picture.

Every AI system, every dataset, every process, every dependency, every control, and every owner comes together in one connected view. That is how you move from siloed assumptions to enterprise-level clarity.

A centralized source of truth is the foundation for assurance. When leaders ask: “What are we using AI for, where are the risks, and what exposures matter most?” you can give answers backed by connected evidence, not guesswork.

Risk Ripple Intelligence Reveals Impact and Root Cause Before Issues Escalate

AI incidents rarely happen in isolation. A data leak can trigger a compliance violation. A model error can misclassify thousands of customers. An outage can stall an entire business process.

The first symptom is rarely the true source of the problem.

Risk Ripple Intelligence shows how risks move across your organization. It reveals the downstream consequences of a single AI failure and, just as importantly, highlights the upstream root cause that created the exposure in the first place.

This is not reactive analysis. It is proactive insight that lets you strengthen vulnerabilities before they turn into incidents.
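The underlying idea of tracing impact downstream and causes upstream can be illustrated with a small dependency graph. The Python sketch below is a generic breadth-first traversal over hypothetical systems. It is not LogicManager's implementation of Risk Ripple Intelligence, only a way to picture the concept.

```python
from collections import deque

# Hypothetical dependency map: each key feeds the items in its list.
downstream = {
    "customer-data-lake": ["churn-model"],
    "churn-model": ["retention-campaigns", "support-prioritization"],
    "retention-campaigns": ["marketing-compliance-review"],
    "support-prioritization": [],
    "marketing-compliance-review": [],
}


def reachable(graph: dict[str, list[str]], start: str) -> set[str]:
    """Breadth-first search: every node reachable from `start`, excluding it."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt != start:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(graph: dict[str, list[str]]) -> dict[str, list[str]]:
    """Reverse every edge so traversal runs upstream instead of downstream."""
    inverted: dict[str, list[str]] = {node: [] for node in graph}
    for node, children in graph.items():
        for child in children:
            inverted.setdefault(child, []).append(node)
    return inverted


# Downstream: what is affected if the churn model fails?
impacted = reachable(downstream, "churn-model")
# Upstream: where could a problem seen in retention campaigns have started?
possible_causes = reachable(invert(downstream), "retention-campaigns")

print(sorted(impacted))
print(sorted(possible_causes))
```

In this example a churn model failure reaches retention campaigns, support prioritization, and the compliance review behind them, while a problem seen in retention campaigns traces back to the model and the data lake that feeds it.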

Bridging Silos So Oversight Matches Reality

AI does not live in one department. It crosses IT, operations, compliance, HR, customer service, and more. If oversight remains siloed, AI risk will always slip through the cracks.

LogicManager bridges these gaps by connecting AI use cases to the people, processes, and responsibilities that rely on them. This alignment ensures that every stakeholder understands:

- What they own

- Where the exposures are

- What happens if something fails

Bridging silos is what creates reliable oversight and meaningful assurance.

Creating the Assurance Leaders Expect

Executives and boards are asking for assurance. They want to know:

- That AI is being used responsibly

- That risks are understood before decisions are made

- That the organization can withstand failures

- That issues will be identified quickly and addressed at the root

- That oversight is connected, complete, and defensible

LogicManager delivers this by giving organizations clarity, connectedness, and risk intelligence they can rely on.

The Bottom Line

AI forces every organization to confront a simple truth: complexity has been building silently for years, and AI accelerates it. The organizations that succeed will be the ones mature enough to look in the mirror and acknowledge the gaps that already exist.

Ask yourself:

- Do you really know where AI is operating in your business?

- Can you see the downstream consequences of a single model failure?

- Are you confident your teams understand their roles in AI oversight?

- If a board member asked for assurance today, would you have an answer or a collection of opinions?

AI does not wait for you to get your house in order. It magnifies whatever oversight weaknesses are already there.

The opportunity is clear. With a risk-based approach and Risk Ripple Intelligence, you can bring that complexity under control, transform uncertainty into insight, and build the confidence your leaders expect.

But only if you take the first step now.